The HDMI standard has come a long way, and its evolution has roughly paralleled that of personal computers and other digital devices such as game consoles.

Connecting a computer (or other digital device) to a display has changed dramatically since the Apple II and the Radio Shack TRS-80, both introduced in 1977, which used composite video output. Composite video is analog and combines all of the video information into a single channel, so both machines could feed the composite input of any TV or monitor that accepted composite video. The original TRS-80 was monochrome only and offered a maximum text resolution of 64 x 16 characters. The Apple II had three video modes: a 40 x 24 character text mode, a 40 x 48 pixel low-resolution graphics mode with 16 colors, and a 280 x 192 pixel high-resolution graphics mode with 6 colors. Before these consumer-oriented computers became popular, there were several competing video standards. One of the first personal computers, the Altair 8800, had no built-in video at all, though several third-party vendors came up with video solutions for it. The SOL-20, introduced in 1976, was another early PC and offered composite monochrome output with a resolution of 64 x 16 characters.

The Commodore 64, introduced in 1982, raised the ante. It offered composite color video, with color and monochrome capabilities that were advanced for the time. It also provided an RF modulator with an RCA jack output, which allowed the computer to be connected to a TV set, and S-Video output through a DIN connector, which provided the best picture quality.

When the IBM PC (and later, IBM PC compatibles) arrived, video got serious, with both resolution and bandwidth ramping up. IBM's first video standard was MDA (Monochrome Display Adapter), which offered text-only output. Alongside it in 1981 came CGA (Color Graphics Adapter), which provided 320 x 200 pixels in 4 colors and 640 x 200 pixels in 2 colors; this became the standard resolution for several years. CGA was followed by EGA (Enhanced Graphics Adapter), VGA (Video Graphics Array), XGA (Extended Graphics Array), and SVGA (Super Video Graphics Array), each offering the higher resolution and color depth needed for graphics-oriented work, business applications, and gaming. MDA, CGA, and EGA sent simple digital (TTL) signals to the monitor, but VGA and its successors switched to analog output.

In 1999, the Digital Display Working Group introduced a new video standard: DVI (Digital Visual Interface). DVI comes in three flavors: DVI-D, DVI-A, and DVI-I. DVI-D is digital only and is the interface usually used to connect an LCD display. DVI-A is analog only and is used to connect older displays such as CRTs. DVI-I is an integrated interface that carries both digital and analog signals. DVI's digital output provides a higher-quality, higher-resolution image, and DVI can connect to an HDMI input with an adapter. DVI was an important step forward in bringing digital video to the PC, but it didn't carry audio, which needed a separate cable to an audio output on the computer or on a sound card.

What is HDMI?

As good as DVI was for many things, it became a roadblock as computers grew more powerful and displays such as televisions and monitors began offering higher resolutions and built-in speakers. Computers could generate the high resolutions needed for demanding uses such as watching video, gaming, and streaming, and displays, both monitors and TVs, improved too, producing more realistic color and smoother motion. Something better than DVI was needed, and that something was HDMI. Today you might still see a PC with a VGA output, but it's pretty rare; current PCs (and many other video devices) use the HDMI standard.

HDMI (High-Definition Multimedia Interface) was introduced in 2002 as the next generation of digital interfaces. It supported higher-resolution video and audio and carried both over a single cable. Beyond computer displays and high-definition audio, HDMI was quickly built into other devices that needed video and audio, such as game consoles. The 1.0 release of HDMI supported a maximum resolution of 1080p at a 60Hz refresh rate and a maximum bandwidth of 4.95 Gbps. On the audio side, HDMI 1.0 supported up to 8 channels of uncompressed audio at 192 kHz and 24-bit resolution, allowing much more realistic audio reproduction.
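To see where the 4.95 Gbps figure comes from, here is a small worked calculation in Python. It is a sketch under stated assumptions: 8-bit RGB color, the standard CTA-861 timing for 1080p at 60Hz (a total frame of 2200 x 1125 pixels once the blanking intervals are included), and a 165 MHz maximum TMDS clock for HDMI 1.0. HDMI sends video over three TMDS channels, each putting 10 bits on the wire for every 8-bit value. The function names are illustrative helpers, not part of any HDMI library.

```python
# Worked example: HDMI 1.0 link budget and uncompressed audio data rate.
# Assumptions: 8-bit RGB color, CTA-861 1080p60 timing (2200 x 1125 total
# pixels per frame including blanking), HDMI 1.0 max TMDS clock of 165 MHz.

TMDS_CHANNELS = 3            # three TMDS data channels carry the video
WIRE_BITS_PER_SYMBOL = 10    # each 8-bit value is sent as a 10-bit symbol
HDMI_1_0_MAX_CLOCK_MHZ = 165


def pixel_clock_mhz(h_total: int, v_total: int, refresh_hz: int) -> float:
    """Pixel clock = total pixels per frame (active + blanking) x frame rate."""
    return h_total * v_total * refresh_hz / 1e6


def tmds_rate_gbps(clock_mhz: float) -> float:
    """Raw TMDS link rate in Gbps for 8-bit color at the given pixel clock."""
    return clock_mhz * TMDS_CHANNELS * WIRE_BITS_PER_SYMBOL / 1000


def pcm_audio_bps(channels: int, sample_rate_hz: int, bits_per_sample: int) -> int:
    """Raw bit rate of uncompressed PCM audio."""
    return channels * sample_rate_hz * bits_per_sample


clock = pixel_clock_mhz(2200, 1125, 60)         # 148.5 MHz
needed = tmds_rate_gbps(clock)                  # 4.455 Gbps
limit = tmds_rate_gbps(HDMI_1_0_MAX_CLOCK_MHZ)  # 4.95 Gbps
audio = pcm_audio_bps(8, 192_000, 24)           # 36,864,000 bit/s

print(f"1080p60 needs {needed:.3f} Gbps of {limit:.2f} Gbps available")
print(f"8-channel 192 kHz / 24-bit audio: {audio / 1e6:.3f} Mbps")
```

The audio stream is small by comparison, under 37 Mbps or well below 1% of the link, and HDMI carries it in the blanking intervals between lines of active video.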

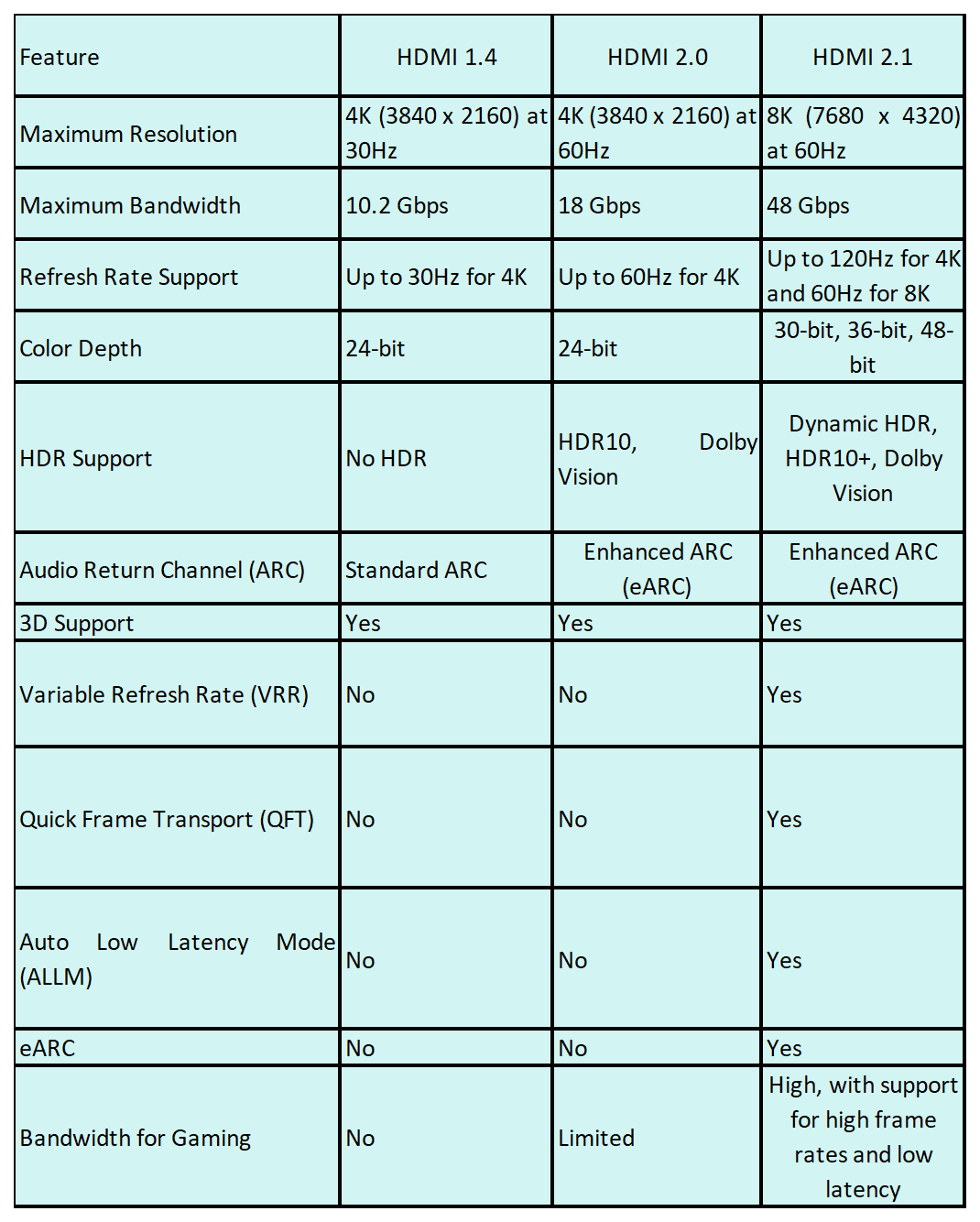

HDMI 1.4, released in 2009, added 4K video: up to 3840 x 2160 pixels at 30Hz, or 4096 x 2160 pixels at 24Hz. This release also added an Audio Return Channel (ARC), which lets audio travel from a display device such as a TV back to an audio device such as a soundbar over the same HDMI cable, eliminating the need for an additional audio cable. It's important to know that in all of the previous versions, audio and video were unidirectional, flowing only from the source, such as a computer or game console, to the display device. HDMI 1.4 introduced bidirectionality, with ARC sending audio in the opposite direction.

A New Generation

HDMI received a significant upgrade in 2013 with the release of HDMI 2.0. This release raised 4K support to 60Hz, up to a maximum of 4096 x 2160 pixels, and increased bandwidth from 10.2 Gbps in HDMI 1.4 to 18 Gbps. A follow-up release, HDMI 2.0a (2015), improved picture quality further by adding support for High Dynamic Range (HDR). HDR lets a monitor or TV display a wider range of brightness and color, producing a higher-quality image with greater contrast between light and dark areas. HDMI 2.0 also introduced dual video streams, which let a display show two separate video streams at once. This enables better multitasking, gaming, and picture-in-picture display.
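The same arithmetic shows why 4K at 60Hz had to wait for HDMI 2.0. The Python sketch below assumes 8-bit RGB color, the standard CTA-861 timing for 3840 x 2160 (4400 x 2250 total pixels per frame including blanking), and maximum TMDS clocks of 165 MHz for HDMI 1.0, 340 MHz for HDMI 1.4, and 600 MHz for HDMI 2.0, which correspond to the 4.95, 10.2, and 18 Gbps figures. HDMI 2.1's 48 Gbps mode uses a different signaling scheme and is deliberately left out. The function names are hypothetical helpers for illustration.

```python
# Sketch: smallest TMDS-based HDMI version that can carry a video mode.
# Assumptions: 8-bit RGB color, CTA-861 total timings (active + blanking).
# HDMI 2.1's 48 Gbps signaling is not modeled here.
from typing import Optional

# Maximum TMDS clock per version, in MHz (x 3 channels x 10 bits = link rate).
MAX_TMDS_CLOCK_MHZ = {
    "1.0": 165,  # 4.95 Gbps
    "1.4": 340,  # 10.2 Gbps
    "2.0": 600,  # 18 Gbps
}


def pixel_clock_mhz(h_total: int, v_total: int, refresh_hz: int) -> float:
    """Total pixels per frame (active + blanking) times frame rate, in MHz."""
    return h_total * v_total * refresh_hz / 1e6


def link_rate_gbps(clock_mhz: float) -> float:
    """Three TMDS channels, 10 bits on the wire for every 8-bit value."""
    return clock_mhz * 3 * 10 / 1000


def min_hdmi_version(clock_mhz: float) -> Optional[str]:
    """Return the oldest listed version whose TMDS clock limit fits, else None."""
    for version, limit in MAX_TMDS_CLOCK_MHZ.items():  # dicts keep insertion order
        if clock_mhz <= limit:
            return version
    return None


modes = {
    "3840x2160 @ 30Hz": (4400, 2250, 30),
    "3840x2160 @ 60Hz": (4400, 2250, 60),
}

for name, timing in modes.items():
    clock = pixel_clock_mhz(*timing)
    print(f"{name}: {clock:.1f} MHz, {link_rate_gbps(clock):.2f} Gbps "
          f"-> HDMI {min_hdmi_version(clock)}")
```

Running it prints 8.91 Gbps for 4K at 30Hz, which fits within HDMI 1.4's 10.2 Gbps, and 17.82 Gbps for 4K at 60Hz, which exceeds HDMI 1.4 but fits just under HDMI 2.0's 18 Gbps.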

The most recent release is HDMI 2.1. Introduced in 2017, it is a significant enhancement over previous releases. Maximum resolution jumped to 10K (10240 x 4320) at 120Hz, with 8K (7680 x 4320) supported at 60Hz. These higher resolutions and refresh rates bring significant improvements in gameplay, along with support for 8K televisions. Bandwidth also increased to 48 Gbps, though achieving it requires an Ultra High Speed HDMI cable. HDR and ARC were enhanced as well. HDMI 2.1 adds support for Dynamic HDR formats, including Dolby Vision and HDR10+, which adjust HDR on the fly, scene by scene or even frame by frame, so brightness, contrast, and color are optimized for each part of the content. Audio gets a boost from eARC (enhanced ARC), which supports higher-quality audio formats such as Dolby Atmos and DTS:X.

HDMI 2.1 also adds Variable Refresh Rate (VRR), which reduces screen tearing and stuttering and is especially helpful in gaming. Also helpful for gaming is Auto Low Latency Mode (ALLM), which lets a source such as a game console automatically switch the display into its low-latency mode.

Which One Is The Best for Me?

Upgrading from HDMI 1.4 (or earlier) to HDMI 2.0 is a no-brainer, assuming that both your computer and your display device support it. Most devices these days, from PCs to Blu-ray players to game consoles, support at least HDMI 2.0. The upgrade is purely a hardware one, so you won't have to change a video driver. Assuming the computer and the display both support HDMI 2.0, you may only need to change the cable. The connectors didn't change between versions, but the cable must handle the higher data rate: an older High Speed HDMI cable (rated for HDMI 1.4's 10.2 Gbps) will still connect to HDMI 2.0 devices, but it isn't guaranteed to carry the full 18 Gbps that enhancements such as 4K at 60Hz require. A cable certified as Premium High Speed is, and the cost is fairly inconsequential.

You may also have to adjust the settings of the display device, such as resolution, color depth, and audio, and enable High Dynamic Range (HDR) if there is a setting for it. If you're adding a soundbar, note that ARC requires at least HDMI 1.4 on both the TV and the soundbar, and eARC requires HDMI 2.1. Finally, make sure that the content you want to view can actually take advantage of the better video and audio capabilities.

The move to HDMI 2.1 is a bit more complicated. First, you need to know whether your hardware can make use of the advanced features. HDMI 2.1 displays are becoming more popular, but if you have no intention of taking advantage of these features, you are probably better off staying with HDMI 2.0 for the time being. There's also the graphics capability of the computer or game device to consider: the graphics hardware in a PC that is several years old may not support HDMI 2.0, let alone 2.1. You may be able to add this capability by replacing the graphics card (assuming your PC has one) with a new card that supports HDMI 2.1.
